MIT – The way we evaluate the performance of other humans is one of the bigger mysteries of cognitive psychology. This process occurs continuously as we judge individuals’ ability to do certain tasks, assessing everyone from electricians and bus drivers to accountants and politicians. The problem is that we have access to only a limited set of data about an individual’s performance—some of it directly relevant, such as a taxi driver’s driving record, but much of it irrelevant, such as the driver’s sex. Indeed, the amount of information may be so vast that we are forced to decide using a small subset of it. How do those decisions get made? Today we get an answer of sorts thanks to the work of Luca Pappalardo at the University of Pisa in Italy and a few pals who have studied this problem in the sporting arena, where questions of performance are thrown into stark relief. Their work provides unique insight into the way we evaluate human performance and how this relates to objective measures.

In recent years, the same players have also been evaluated by an objective measurement system that counts the number of passes, shots, tackles, saves, and so on that each player makes. This technical measure takes into account 150 different parameters and provides a comprehensive account of every player’s on-pitch performance. The question that Pappalardo and co ask is how the newspaper ratings correlate with the technical ratings, and whether it is possible to use the technical data to understand the factors that influence human ratings.The researchers start with the technical data set of 760 games in Serie A in the 2015-16 and 2016-17 seasons. This consists of over a million data points describing time-stamped on-pitch events. They use the data to extract a technical performance vector for each player in every game; this acts as an objective measure of his performance.

The researchers also have the ratings for each player in every game from three sports newspapers: Gazzetta dello Sport, Corriere dello Sport, and Tuttosport.

The newspaper ratings have some interesting statistical properties. Only 3 percent of the ratings are lower than 5, and only 2 percent higher than 7. When the ratings are categorized in line with the school ratings system—as bad if they are lower than 6 and good if they are 7 and above—bad ratings turn out to be three times as common as good ones.

In general, the newspapers rate a performance similarly, although there can be occasional disagreements by up to 6 points. “We observe a good agreement on paired ratings between the newspapers, finding that the ratings (i) have identical distributions; (ii) are strongly correlated to each other; and (iii) typically differ by one rating unit (0.5),” say Pappalardo and co.

To analyze the relationship between the newspaper ratings and the technical ratings, Pappalardo and co use machine learning to find correlations in the data sets. In particular, they create an “artificial judge” that attempts to reproduce the newspaper ratings from a subset of the technical data.

This leads to a curious result. The artificial judge can match the newspaper ratings with a reasonable degree of accuracy, but not as well as the newspapers match each other. “The disagreement indicates that the technical features alone cannot fully explain the [newspaper] rating process,” say Pappalardo and co.

In other words, the newspaper ratings must depend on external factors that are not captured by the technical data, such as the expectation of a certain result, personal bias, and so on.

To test this idea, Pappalardo and co gathered another set of data that captures external factors. These include the age, nationality, and club of the player, the expected game outcome as estimated by bookmakers, the actual game outcome, and whether a game is played at home or away.

When this data is included, the artificial judge does much better. “By adding contextual information, the statistical agreement between the artificial judge and the human judge increases significantly,” say the team.

Indeed, they can clearly see examples of the way external factors influence the newspaper ratings. In the entire data set, only two players have ever been awarded a perfect 10. One of these was the Argentine striker Gonzalo Higuaín, who played for Napoli. On this occasion, he scored three goals in a game, and in doing so he became the highest-ever scorer in a season in Serie A. That milestone was almost certainly the reason for the perfect rating, but there is no way to derive this score from the technical data set.

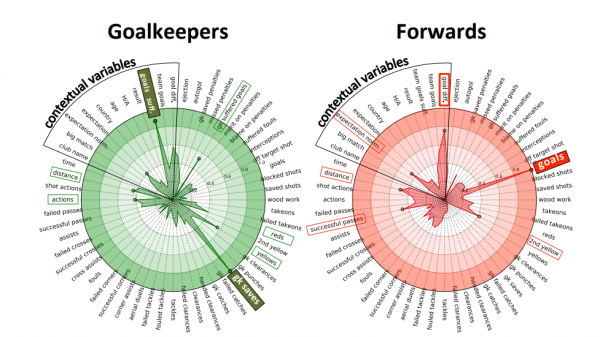

An important question is what factors the artificial judge uses to match the newspaper ratings. “We observe that most of a human judge’s attention is devoted to a small number of features, and the vast majority of technical features are poorly considered or discarded during the evaluation process,” say Pappalardo and co.

So for attacking forward players, newspapers tend to rate them using easily observed factors such as the number of goals scored; they rate goalkeepers on the number of goals conceded. Midfield players tend to be rated by more general parameters such as the goal difference.

That makes sense—human observers have a limited bandwidth and are probably capable of observing only a small fraction of performance indicators. Indeed, the team say the artificial judge can match human ratings using less than 20 of the technical and external factors.

That’s a fascinating result that has important implications for the way we think about performance ratings. The goal, of course, is to find more effective ways of evaluating performance in all kinds of situations. Pappalardo and co think their work has a significant bearing on this. “This paper can be used to empower human evaluators to gain understanding on the underlying logic of their decisions,” they conclude

La mise en réseaux constante des internautes à travers le Web, les médias sociaux, les objets connectés (mais aussi le Dark Web et autres zones moins connues) coïncide avec une tendance sociétale, notamment dans les pays occidentaux, où la désagrégation de la confiance entre les autorités traditionnelles et les citoyens ne cesse de se creuser. Depuis des années, le Blog du Communicant en fait d’ailleurs régulièrement état, grâce en particulier au remarquable outil qu’est l’Edelman Trust Barometer qui en est à sa (bientôt) 18ème édition. Or, la rencontre de cette confiance toujours plus ébréchée et cette capacité technologique toujours plus amplifiée à diffuser (ou s’emparer) des données (vraies ou fausses selon l’objectif) a largement modifié les rapports de force entre d’un côté, les institutions, les entreprises, les experts, les médias classiques et de l’autre, de nouveaux agents propagateurs aux visages divers mais bénéficiant d’un regain de confiance supérieur de la part des internautes. Ainsi, fera-t-on plus facilement crédit aux commentaires de Trip Advisor qu’aux critiques de revues spécialisées dans le tourisme ou la gastronomie.

La mise en réseaux constante des internautes à travers le Web, les médias sociaux, les objets connectés (mais aussi le Dark Web et autres zones moins connues) coïncide avec une tendance sociétale, notamment dans les pays occidentaux, où la désagrégation de la confiance entre les autorités traditionnelles et les citoyens ne cesse de se creuser. Depuis des années, le Blog du Communicant en fait d’ailleurs régulièrement état, grâce en particulier au remarquable outil qu’est l’Edelman Trust Barometer qui en est à sa (bientôt) 18ème édition. Or, la rencontre de cette confiance toujours plus ébréchée et cette capacité technologique toujours plus amplifiée à diffuser (ou s’emparer) des données (vraies ou fausses selon l’objectif) a largement modifié les rapports de force entre d’un côté, les institutions, les entreprises, les experts, les médias classiques et de l’autre, de nouveaux agents propagateurs aux visages divers mais bénéficiant d’un regain de confiance supérieur de la part des internautes. Ainsi, fera-t-on plus facilement crédit aux commentaires de Trip Advisor qu’aux critiques de revues spécialisées dans le tourisme ou la gastronomie. Aux yeux du militaire largement rompu aux conflits numériques que le Comsic surveille au grain, trop nombreuses sont encore les organisations à disposer d’une culture Web déficiente. Ce qui les conduit bien souvent à minorer les risques, sous-investir en ressources humaines, techniques et budgétaires … jusqu’au jour où la catastrophe survient. Souvenez-vous du piratage gigantesque de Yahoo il y a quelques années ! Acteur pourtant placé mieux que quiconque pour embrasser cette dimension, le pionnier du Web s’est proprement fait dévaliser ses données avant de l’admettre piteusement des années plus tard et de finalement disparaître de la scène industrielle, la marque étant tellement discréditée (même si d’autres critères expliquent aussi la chute de Yahoo).

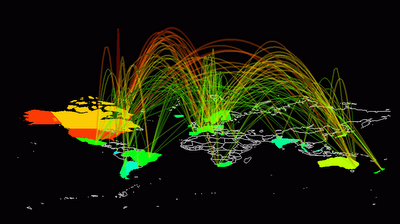

Aux yeux du militaire largement rompu aux conflits numériques que le Comsic surveille au grain, trop nombreuses sont encore les organisations à disposer d’une culture Web déficiente. Ce qui les conduit bien souvent à minorer les risques, sous-investir en ressources humaines, techniques et budgétaires … jusqu’au jour où la catastrophe survient. Souvenez-vous du piratage gigantesque de Yahoo il y a quelques années ! Acteur pourtant placé mieux que quiconque pour embrasser cette dimension, le pionnier du Web s’est proprement fait dévaliser ses données avant de l’admettre piteusement des années plus tard et de finalement disparaître de la scène industrielle, la marque étant tellement discréditée (même si d’autres critères expliquent aussi la chute de Yahoo). Directeur d’Aeneas Conseil et Sécurité, Serge Lopoukhine est familier de l’intelligence économique. Avec l’avènement des technologies numériques, il est au premier rang pour constater que le risque n’est plus un épiphénomène médiatique ou réservé à une catégorie d’acteurs comme l’armement, l’espionnage et autres secteurs discrets. Pour 2016, il mentionne le chiffre de 4500 attaques cyber en France soit une moyenne de 2,2 par jour. Certaines sont vite repérées et jugulées mais d’autres peuvent avoir une onde tellurique puissante tant les outils à disposition relèvent désormais de l’inventaire à la Prévert (bien que les petits noms n’aient rien de francophones !) : botnets, malwares, ransomwares, vers, spywares, sockpuppets, DDOS, astroturfing et la liste est loin d’être close.

Directeur d’Aeneas Conseil et Sécurité, Serge Lopoukhine est familier de l’intelligence économique. Avec l’avènement des technologies numériques, il est au premier rang pour constater que le risque n’est plus un épiphénomène médiatique ou réservé à une catégorie d’acteurs comme l’armement, l’espionnage et autres secteurs discrets. Pour 2016, il mentionne le chiffre de 4500 attaques cyber en France soit une moyenne de 2,2 par jour. Certaines sont vite repérées et jugulées mais d’autres peuvent avoir une onde tellurique puissante tant les outils à disposition relèvent désormais de l’inventaire à la Prévert (bien que les petits noms n’aient rien de francophones !) : botnets, malwares, ransomwares, vers, spywares, sockpuppets, DDOS, astroturfing et la liste est loin d’être close.